In my experience, entropy as an idea is generally misunderstood. Like many, I had a gut-level understanding that “entropy is disorder”. It’s easy to misapply that intuition and draw wrong conclusions about areas far removed from physics (business, economies, cultures, etc.). Remember: thinking in analogies is dangerous.

So, I decided to dive deeper into entropy and here are my notes on the topic (as a series of easily digestible tweets).

1/ A short thread on ENTROPY, and its misapplications

2/ First and foremost, know that there are two different things that are called entropy: one is thermodynamic entropy and the other one is information entropy. Whenever someone is talking about entropy, ask which one.

3/ Thermodynamic entropy: it started with Carnot and Clausius; Clausius defined it as the amount of heat (energy) absorbed by a system per unit of temperature

4/ Actually, more precisely, thermodynamic entropy was defined for a reversible process at constant temperature. Roughly, it translates to how much energy can go into the system without raising its temperature
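In symbols, the Clausius definition described above is usually written as follows. This is a sketch in standard textbook notation; the symbols $\delta Q_{\text{rev}}$ (heat absorbed reversibly), $T$ (absolute temperature) and $S$ (entropy) are my additions, not something from the original thread:

$$
dS = \frac{\delta Q_{\text{rev}}}{T}
\qquad\Longrightarrow\qquad
\Delta S = \frac{Q_{\text{rev}}}{T} \quad \text{(reversible process at constant } T\text{)}
$$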

5/ This definition was hard to interpret and hard to measure, plus there were unexplained phenomena: when two systems were brought into contact, their combined entropy was never smaller than the sum of their individual entropies. (You may recognise this as the second law of thermodynamics)

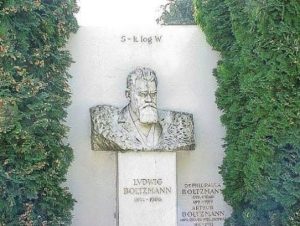

6/ The second law was explained intuitively by Boltzmann, whose famous equation (S = k log W) is engraved on his tombstone

7/ Boltzmann defined entropy as a statistical property of atoms. Put simply, thermodynamic entropy is the log of the number of microscopic states of the system that share the same temperature.
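To make "log of the number of states" concrete, here is a minimal sketch using a toy model that is my assumption, not Boltzmann's actual system: 100 coins stand in for atoms, the exact heads/tails sequence is the microstate, and the total number of heads plays the role of the macro measurement (like temperature). The function names are hypothetical.

```python
import math

def microstates(n_coins: int, n_heads: int) -> int:
    """Count the exact head/tail sequences (microstates) that have n_heads heads."""
    return math.comb(n_coins, n_heads)

def boltzmann_entropy(w: int, k: float = 1.0) -> float:
    """S = k log W. With k = 1 the result is in natural units (nats)."""
    return k * math.log(w)

n = 100  # toy system size (an assumption for illustration)
for heads in (0, 10, 50):
    w = microstates(n, heads)
    print(f"heads={heads:3d}  W={w:.3e}  S={boltzmann_entropy(w):.2f}")
```

The macrostate "0 heads" has exactly one microstate, so its entropy is zero, while "50 heads" can be realised in vastly more ways and so has the highest entropy of the three.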

8/ Temperature is, roughly, a measure of the average energy of atoms, and there are multiple ways energy is stored in atoms: kinetic energy (when they’re moving), vibrational energy, covalent bonds, etc.

9/ The colloquially used phrase ‘entropy is disorder’ is misleading because even the most perfectly ordered things (say, diamond) have entropy. A diamond’s atoms are constantly vibrating and jostling, and across all those vibrations the diamond’s temperature stays constant, so its entropy is well defined

10/ Temperature is a macro measure. When you put a mercury thermometer in contact with a system, the mercury level rises because the liquid expands (and it expands because of kinetic energy added by the system whose temperature is being measured)

11/ When we read 100 degrees on the temperature scale, we are measuring the average energy of the mercury’s atoms and, via that, the average energy of the measured system’s atoms. We are hence relying on an averaged value (temperature) over the specific microstates of the system (different atoms and their energies)

12/ In this way, thermodynamic entropy is a relative measure. It depends not just on the system being measured (more microstates or degrees of freedom = more entropy), but also on our interactions with (or knowledge of) it.

13/ If we could take a perfect photo of a system, capturing the energies of all of its atoms, its entropy would be zero because our interaction (knowledge) would pin down the system’s exact microstate

14/ It’s only our macro-level interactions that give rise to entropy. So rather than saying ‘entropy = disorder’, a better rule of thumb is ‘entropy = uncertainty’

15/ This rule of thumb also explains the second law of thermodynamics, or why entropy rises or remains the same in closed systems but never decreases

16/ It’s actually wrong to say that entropy never decreases.

Yes, in general, total entropy rises because when systems interact with other systems, their combined microstates far outnumber their individual microstates

17/ As an example, the number of combinations of two decks of playing cards is greater than the sum of the combinations of the individual decks.

This is simple combinatorial math.
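The card-deck claim can be checked directly. This is a small sketch under my own assumptions: each deck has 52 distinct cards, and when the two decks are merged their cards stay distinguishable (say, different back colours). The variable names are illustrative.

```python
import math

deck = math.factorial(52)        # orderings of one 52-card deck
sum_of_decks = deck + deck       # simply adding the two counts
side_by_side = deck * deck       # two decks, each shuffled on its own
merged = math.factorial(104)     # both decks shuffled into a single pile

# Combining multiplies possibilities; mixing the decks grows them further still.
assert sum_of_decks < side_by_side < merged

print(f"log(one deck)       = {math.log(deck):.1f}")
print(f"log(two separately) = {math.log(side_by_side):.1f}")
print(f"log(merged pile)    = {math.log(merged):.1f}")
```

Taking the log turns the multiplication into addition: the log for two separately shuffled decks is exactly twice the log for one deck, which is why the entropies of independent systems add up while their microstate counts multiply.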

18/ So when system 1 is in contact with system 2, our ‘uncertainty’ about the exact microstate behind the measured macrostate increases because there are simply more microstates.

19/ However, entropy can decrease as it’s a probabilistic idea.

As you’re shuffling two random decks of cards, just by pure luck, you may find they are perfectly ordered. In such unlikely cases, entropy decreases (and many subcellular phenomena use this fact to function)
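How unlikely is "pure luck"? Here is a rough sketch that assumes a single deck of n distinct cards and a perfectly uniform shuffle (a simplification of the two-deck picture above). The helper name is hypothetical.

```python
import math
from fractions import Fraction

def prob_perfectly_ordered(n_cards: int) -> Fraction:
    """Chance that a uniform shuffle of n distinct cards lands in one specific order."""
    return Fraction(1, math.factorial(n_cards))

for n in (2, 5, 10, 52):
    print(f"{n:2d} cards: {float(prob_perfectly_ordered(n)):.3e}")
```

With a handful of cards the ordered outcome turns up regularly; with 52 it is vanishingly rare. That is at least consistent with the point about subcellular phenomena: systems with few parts see such lucky fluctuations far more often than everyday objects do.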

20/ In general, however, entropy rises because humans deal with macro-level phenomena (trees, food, jewellery), while on the atomic level, as systems interact, they tend to gain microstates (which increases the entropy we perceive)

21/ It’s worth remembering that, unlike energy, there is no such thing as the entropy of an atom.

Entropy is a statistical measure of a combination of atoms.

22/ For life to exist, its constituents have to remain bound to each other in some fashion. It has to actively work against the statistical nature of intermingling systems.

23/ I see life as a statistical pattern that has made itself immune to intermingling with the rest of the world by consuming certainty (low-entropy sources, such as sunlight with photons of a particular frequency) and redirecting uncertainty away from itself (our body heat)

24/ That was thermodynamic entropy. Now, information entropy as defined by Shannon.

25/ Thermodynamic entropy is defined in the precise sense of its relation to temperature and average energy (of atoms).

Information entropy is defined in the context of probability distributions.

26/ It’s defined as: given a probability distribution, whenever we sample a data point, how much do we learn on average about the underlying distribution. It’s also described as expected ‘surprisal’, that is, given a probability distribution, how much we should expect to be surprised on average.

27/ For probability distributions that are sharply peaked (that is, higher certainty), entropy is lower because we don’t expect to learn much new when we sample a data point from that distribution (because we already know it’s likely to come from a particular range)

28/ For uniform probability distributions where all outcomes are equally likely, entropy is higher because we don’t know what value is going to come next from that distribution (hence we expect to be surprised)
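The peaked-versus-uniform contrast can be sketched in a few lines using Shannon's formula H = -Σ p log₂ p, measured in bits. The three example distributions below are made up for illustration, and the function name is my own.

```python
import math

def shannon_entropy(probs):
    """Shannon entropy in bits: the sum of -p * log2(p), skipping zero-probability outcomes."""
    return sum(-p * math.log2(p) for p in probs if p > 0)

certain = [1.0, 0.0, 0.0, 0.0]      # no surprise at all
peaked = [0.97, 0.01, 0.01, 0.01]   # almost always the first outcome
uniform = [0.25, 0.25, 0.25, 0.25]  # every outcome equally likely

for name, dist in (("certain", certain), ("peaked", peaked), ("uniform", uniform)):
    print(f"{name:8s} H = {shannon_entropy(dist):.3f} bits")
```

The certain distribution has zero entropy, the peaked one has a little, and the uniform one reaches the maximum for four outcomes: log₂ 4 = 2 bits.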

29/ In a way, information and thermodynamic entropic measures are connected by ‘uncertainty’ in the underlying microstates of a system.

30/ For thermodynamic entropy, it’s the log of the number of microstates consistent with a given temperature.

For information entropy, it’s the log of the number of states a system can be in that are consistent with a given macro-description (when all those states are equally likely).
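The link between the two can be written out explicitly. This is a sketch in standard notation, where $p_i$ is the probability of state $i$, $W$ is the number of states, and $k$ is Boltzmann's constant; apart from $S$, $k$ and $W$, the symbols are my additions. If all $W$ states are equally likely, so that $p_i = 1/W$, Shannon's formula collapses to a plain log of the number of states:

$$
H = -\sum_{i=1}^{W} p_i \log p_i
  = -\sum_{i=1}^{W} \frac{1}{W} \log \frac{1}{W}
  = \log W,
\qquad\text{compare}\qquad
S = k \log W
$$

So, under that equal-likelihood assumption, the two measures differ only by the constant $k$ and the choice of log base.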

31/ In general, I’m very skeptical of the misuse of these precisely defined ideas in domains such as business, culture, and economies.

There are interesting connections for sure, but I hope understanding these measures precisely will help us invent better measures for other domains.
