Let’s imagine Artificial Intelligence, but in reverse. In such a world, humans are equivalent of machine learning algorithms (like deep learning) and some aliens (or our simulation overlords) feed labeled information from their world into us and ask us to “learn” the mapping between the inputs and the outputs. In all likelihood, their world will be incomprehensible to us (as it would have a very different nature than our world). Hence, whatever data is fed to us will seem random (as, in our world, we’d have never come across it before).

Because the data will look random, it’ll essentially be white noise. In this light, the task given to us seems impossible: how do we extract meaningful patterns from white noise?

Having zero background knowledge about the alien world, you’d not assume anything and hence will require lots of data points tease out any correlations present in the dataset. For example, maybe, when aliens mapped their data to our 2D visual field, the real determiner of whether it corresponds to a specific label or not is a combination of the 5th, 99th, and 213th pixel. Or, maybe when you save this white noise into a .wav file, it corresponds to a sine wave (which is the intended label). Or maybe, just to mess with us, aliens have simply fed us truly garbled data.

The difficulty in finding patterns in the alien dataset is that, because we know nothing about the alien world, we’d need to have an infinite number of such hypotheses on what constitutes an input -> output mapping. This mapping could literally be anything and a few examples of data won’t suffice. We’d need millions of examples and a lot of patience to tease out patterns.

For machines, ours is an alien world

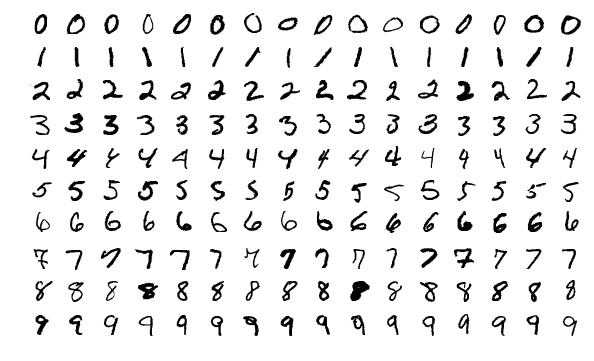

Now imagine what it would like to be a deep neural network trying to label images of handwritten digits. The MNIST dataset (see below) is one of the most famous datasets in machine learning, and, apparently, modern algorithms have achieved a “near human accuracy” on predicting which image corresponds to which digit.

But getting to that “near human accuracy” took 60,000 examples (the size of MNIST database) fed into the algorithm. A human child can start recognizing digits with only a few examples. Hence, the question arises:

Why is the machine algorithm so inefficient as compared to a human child?

The key to understanding the reason behind this inefficiency is to appreciate that what looks like a “1” to us looks like white noise to an algorithm. Algorithms have absolutely no idea about the nature of our world.

Just like an alien data set will likely look random and incomprehensible to us. To an algorithm, the following two images are exactly the same because it knows nothing about our world.

The secret to quick learning in humans: evolution

Evolution explains why humans are able to learn generic patterns using only a few examples. We haven’t popped into existence ex nihilo. Our existence is a result of several billion years of evolution where organisms who “knew” more about the world survived better than the ones who had no clue.

For example, a deer who didn’t “know” that a moving predator (cheetah) will soon reach close to it died pretty soon. Similarly, a deer who didn’t “know” that broken tree branches fall down also died pretty soon. On the other hand, the deer who had the “hunch” of running away from moving objects learned that moving things collide and hence survived.

In this way, the process of evolution encodes information about the world in an organism at a very deep level. That is why we do not have to “think” and solve for Newton’s law of motion to know that a flying spear can hit us.

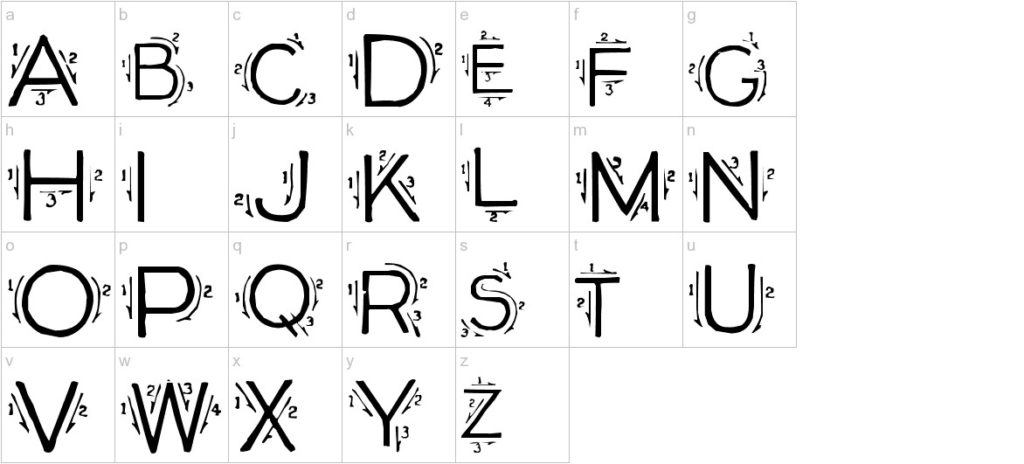

In case of handwritten digits, it is our background knowledge that helps make learning from a few examples possible. The first piece of knowledge is about how human hands work. While writing, rather than pushing the pen in random directions, we always make strokes. It’s due to how our hands’ physical structure has evolved. It’s a fact that most (all?) written languages have characters which require stroke-like patterns.

If you know that fact about human hands, it’s far easier to guess what strokes constitute what digits, rather than what exact sequence of pixels constitute what digits.

On the contrary, a machine learning algorithm doesn’t know anything about human hands. For an algorithm with zero background knowledge, first, it has to learn that we use strokes. Then, even after learning about strokes, it has to learn that we use few strokes (say, less than five) rather than say a million strokes to write digits.

So it’s no surprise that machine learning algorithm require such a large training data set to make any sense of our world.

Why machine learning algorithms beat grandmasters at Chess

Our hands evolved for precise tool handling and hence we use similar patterns while using a pen, hence we use strokes for writing. Our brains evolved to help us navigate social situations. If you cast abstract logical problems as social situations, our brains solve them faster.

This explains why machine learning algorithms are much better than humans at tasks that are more recent in our history (chess, detecting cancer, etc.) These problems have a structure that’s very different than what’s found in nature.

The sample space of valid chess moves is mathematical and has no analog in the world where humans evolved. Though humans have a leg up when it comes to having background knowledge that there are such things such as valid or invalid moves, but beyond that, unlike recognizing handwritten digits where learning is “automatic”, humans have to master chess through years of practice. So it’s no wonder that a machine learning algorithm can practice a lot more and get much better than humans at chess.

Next time you wonder why a machine learning algorithm can’t do something that occurs to you as “common sense” (such as telling jazz and blues apart, or laughing at a joke), know that evolution has taken billions of years to embed in you a “gut knowledge” about these things while machine learning algorithm starts from ground zero. Your brain is not just a computer. It’s a computer with a huge amount of background knowledge about physics of our world and the social structure of human groups.

Join 150k+ followers

Follow @paraschopra